Are FPGAs Better for AI?

FPGAs and AI

1. Understanding the Players

Artificial intelligence is revolutionizing everything from how we shop to how doctors diagnose diseases. But powering these AI systems requires serious computational muscle. For years, CPUs (Central Processing Units) and GPUs (Graphics Processing Units) have been the workhorses. CPUs are the generalists, good at handling a wide variety of tasks. GPUs, originally designed for rendering graphics, excel at performing the same operation on massive amounts of data simultaneously, which makes them a natural fit for the matrix multiplications that form the backbone of many AI algorithms. But another contender has been gaining ground: the Field-Programmable Gate Array, or FPGA.
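To make the matrix-multiplication point concrete, here is a minimal plain-Python sketch of a single dense (fully connected) neural-network layer. The function name `dense_layer` and the tiny example values are hypothetical, and real frameworks call optimized libraries rather than Python loops. Each output is a chain of multiply-accumulate (MAC) operations that does not depend on the other outputs. That repetitive, independent arithmetic is exactly what can be spread across many arithmetic units, whether on a GPU or in custom FPGA circuitry.

```python
def dense_layer(x: list[float], weights: list[list[float]]) -> tuple[list[float], int]:
    """Compute y = W @ x for one input vector and count multiply-accumulates (MACs)."""
    macs = 0
    y = []
    for row in weights:
        if len(row) != len(x):
            raise ValueError("each weight row must match the input length")
        acc = 0.0
        for w, xi in zip(row, x):
            acc += w * xi  # one multiply-accumulate
            macs += 1
        y.append(acc)  # this output never depends on the other rows' results
    return y, macs


x = [1.0, 2.0, 3.0]
W = [[0.5, 0.0, 1.0],
     [1.0, 1.0, 1.0]]
y, macs = dense_layer(x, W)
print(y, macs)  # [3.5, 6.0] 6
```

A layer with M outputs and K inputs needs M x K MACs per input vector, so a 4,096-by-4,096 layer already needs about 16.8 million of them.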

Think of CPUs and GPUs as pre-built processors: you write software, and they execute it. An FPGA, on the other hand, is like a blank slate. You configure its internal logic blocks, and the wiring between them, to perform specific tasks in a highly optimized way. It's like building a custom circuit board tailored precisely to the needs of your AI application. This flexibility comes at a cost, though: programming FPGAs requires specialized skills and is usually more complex than writing software for CPUs or GPUs.
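One way to picture the "blank slate" is that much of an FPGA's logic fabric is made of small lookup tables (LUTs), each holding a truth table that gets loaded when the chip is configured. The toy Python model below uses a hypothetical helper, `make_lut`, to configure the same two-input "hardware" first as an AND gate and then as an XOR gate. It is only an illustration under that simplifying assumption. Real devices also contain flip-flops, programmable routing, memory blocks, and DSP blocks, and they are configured through vendor tools rather than code like this.

```python
def make_lut(truth_table: int, num_inputs: int):
    """Return a function that behaves like a k-input LUT loaded with truth_table.

    Bit i of truth_table is the output for the input combination whose binary
    encoding is i, with the first input as the least significant bit.
    """
    if not 0 <= truth_table < (1 << (1 << num_inputs)):
        raise ValueError("truth table must fit in 2**num_inputs bits")

    def lut(*inputs: int) -> int:
        if len(inputs) != num_inputs:
            raise ValueError(f"expected {num_inputs} inputs, got {len(inputs)}")
        index = 0
        for position, bit in enumerate(inputs):
            index |= (bit & 1) << position  # build the row number of the truth table
        return (truth_table >> index) & 1  # read the stored output bit for that row

    return lut


# The same two-input "hardware", configured two different ways.
and_gate = make_lut(0b1000, 2)  # 1 only when both inputs are 1
xor_gate = make_lut(0b0110, 2)  # 1 when exactly one input is 1

rows = [(a, b) for a in (0, 1) for b in (0, 1)]
print([and_gate(a, b) for a, b in rows])  # [0, 0, 0, 1]
print([xor_gate(a, b) for a, b in rows])  # [0, 1, 1, 0]
```

Changing the circuit's behavior means loading a different bit pattern, not writing a new program, which is the essence of "field-programmable".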

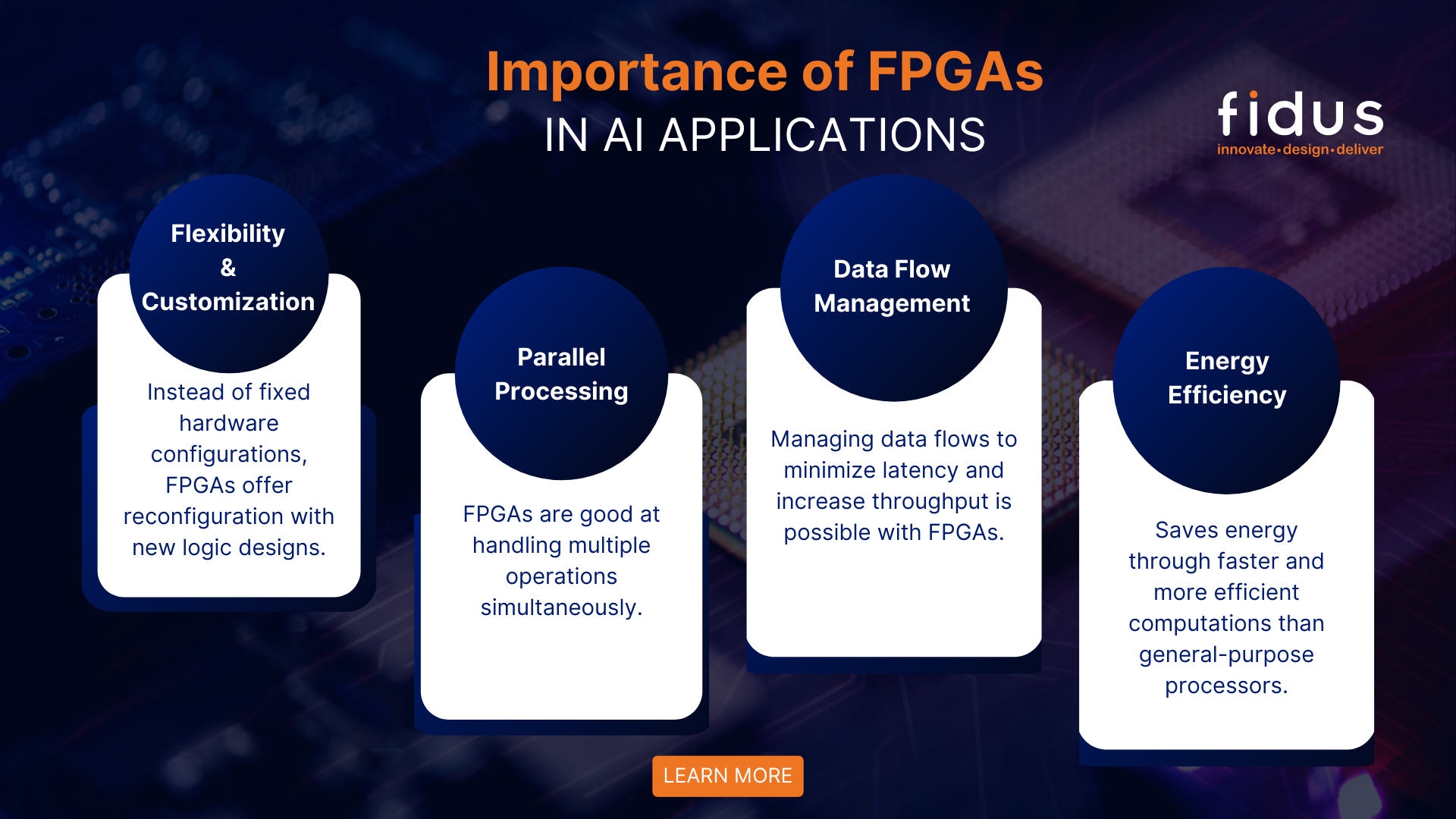

The core difference lies in their architecture. CPUs are built for largely sequential work, running a stream of instructions very quickly on each of a relatively small number of cores. GPUs use a massively parallel approach, performing the same calculation on many pieces of data at once. FPGAs take a different route to parallelism: you lay out dedicated hardware pipelines that process data as it flows through the chip, with each stage of the computation running in its own circuitry at the same time as the others. In other words, they aren't just doing things in parallel; they're built for parallelism from the ground up.
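A rough way to see why pipelining pays off is to count clock cycles. The sketch below is a toy model that assumes every stage takes exactly one cycle and the pipeline never stalls. `sequential_cycles` and `pipelined_cycles` are illustrative names, not part of any tool. Pushing N items one at a time through S stages takes N x S cycles. A full pipeline finishes in S + N - 1 cycles, because once it has filled, one new item enters and one finished item leaves every cycle.

```python
def sequential_cycles(num_items: int, num_stages: int) -> int:
    """Cycles needed when each item passes through all stages before the next starts."""
    return num_items * num_stages


def pipelined_cycles(num_items: int, num_stages: int) -> int:
    """Simulate a pipeline in which each stage holds at most one item per cycle."""
    if num_stages < 1:
        raise ValueError("a pipeline needs at least one stage")
    stages = [None] * num_stages  # stages[i] holds the item currently in stage i
    next_item = 0
    finished = 0
    cycles = 0
    while finished < num_items:
        cycles += 1
        # Clock edge: every item moves one stage forward; a new item, if any, enters stage 0.
        incoming = next_item if next_item < num_items else None
        if incoming is not None:
            next_item += 1
        stages = [incoming] + stages[:-1]
        # The item sitting in the last stage completes at the end of this cycle.
        if stages[-1] is not None:
            finished += 1
    return cycles


print(sequential_cycles(1000, 5), pipelined_cycles(1000, 5))  # 5000 1004
```

For 1,000 items through a 5-stage pipeline, that is 5,000 cycles versus 1,004, close to a fivefold throughput gain, even though each individual item still needs 5 cycles to get through.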

So, if you need something highly specific, and you have the engineering chops to define it, then FPGAs might be the right call. They're like the custom-built race cars of the AI world: extremely fast on the track they were built for, but requiring a skilled driver (and mechanic!).

FPGA In AI Accelerating Deep Learning Inference

Are FPGAs Better for AI? The Case For and Against

2. Speed, Power, and Adaptability

The big advantage FPGAs can offer for AI is efficiency. Because the hardware can be customized to match the requirements of a specific AI model, an FPGA design can often achieve lower latency and better performance per watt than a CPU, and for some workloads than a GPU, although high-end GPUs usually still lead in raw throughput. Think of it like this: a CPU or GPU is like using a Swiss Army knife to cut a piece of paper; it can do the job, but it's not the ideal tool. An FPGA, on the other hand, is like a purpose-built paper cutter: faster and more efficient at that one job.

Power efficiency is becoming increasingly important, especially in edge computing applications like self-driving cars and mobile devices. These applications need to perform AI tasks quickly without draining the battery or exceeding a tight power budget. FPGAs can do well in these scenarios because a design contains only the circuitry a given model needs, so little energy is spent on general-purpose overhead such as fetching and decoding instructions.
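For battery-powered devices, the figure that matters is energy per inference: average power divided by throughput. The short sketch below computes it. The function name and both devices' power and throughput figures are hypothetical, chosen only to illustrate the arithmetic; they are not measured results.

```python
def energy_per_inference_mj(avg_power_watts: float, inferences_per_second: float) -> float:
    """Energy per inference in millijoules.

    Watts are joules per second, so dividing by inferences per second gives
    joules per inference; multiplying by 1000 converts to millijoules.
    """
    if inferences_per_second <= 0:
        raise ValueError("throughput must be positive")
    return avg_power_watts * 1000.0 / inferences_per_second


# Hypothetical figures for illustration only (not benchmarks).
fast_but_hungry = energy_per_inference_mj(avg_power_watts=75.0, inferences_per_second=1500.0)
slower_but_lean = energy_per_inference_mj(avg_power_watts=20.0, inferences_per_second=800.0)
print(fast_but_hungry, slower_but_lean)  # 50.0 25.0
```

In this made-up comparison, the slower device uses half the energy for each inference. For an edge device with a fixed power budget, performance per watt matters more than raw speed alone.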

Another key advantage of FPGAs is their adaptability. AI algorithms are constantly evolving, and what works well today might be obsolete tomorrow. Unlike a fixed-function chip, an FPGA can be reconfigured to implement new algorithms and architectures, so custom hardware does not have to be thrown away when the model changes. CPUs and GPUs adapt too, simply by running new software, but they cannot rewire their underlying circuitry to fit a new model; an FPGA can.

However, it's not all sunshine and roses. The design process for FPGAs is quite involved. It demands experts who understand not only AI but also hardware design. This high barrier to entry means FPGAs are not suitable for every AI project.

The Downside

3. The FPGA Learning Curve

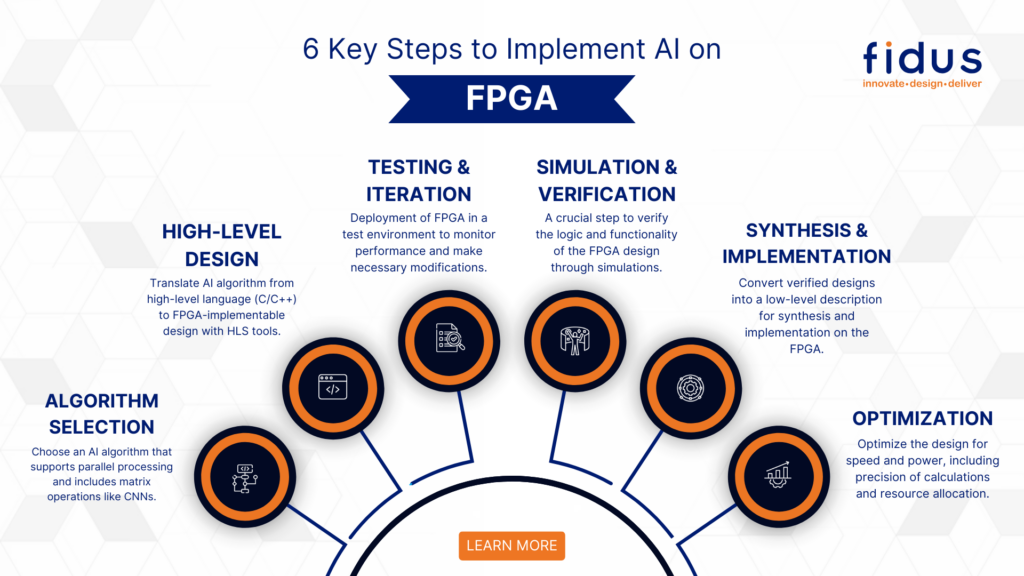

While FPGAs offer incredible potential, they also come with some significant challenges. The biggest hurdle is the complexity of programming them. CPUs and GPUs are programmed in software languages such as Python, C++, or (for GPUs) CUDA, whereas FPGAs have traditionally been designed in specialized hardware description languages (HDLs) such as Verilog and VHDL. These languages describe circuits rather than sequences of instructions, so using them well requires a deep understanding of digital circuit design.

Learning these languages and mastering the tools required to design and implement FPGA-based AI systems can be a steep and time-consuming process. It's not something you can pick up over a weekend. As a result, FPGA-based AI work is mostly done by specialized engineers and researchers with expertise in both AI and hardware design.

The development tools for FPGAs can also be expensive. Full-featured licenses for the software used to design, simulate, and program FPGAs can cost thousands of dollars, although the major vendors also offer free editions that support a subset of their devices. This upfront cost can be a barrier to entry for smaller companies and individual researchers.

Debugging can also be more involved. Finding and fixing errors in FPGA designs can be a real headache, often requiring detailed simulation and specialized tools such as on-chip logic analyzers that capture internal signals while the design runs.

Where FPGAs Shine

4. From Edge to Cloud

Despite the challenges, FPGAs are finding their niche in a growing number of AI applications. One area where they excel is edge computing, where AI tasks are performed directly on devices rather than in the cloud. Examples include self-driving cars, drones, and smart cameras. In these applications, FPGAs' low power consumption and high performance can make them a strong choice.

Another area where FPGAs are making a significant impact is accelerating specific AI workloads, most often inference: running already trained neural networks on new data. FPGA-based training has been explored, but training large networks is dominated by GPUs and purpose-built chips. By offloading the most computationally intensive parts of inference to FPGAs, companies can reduce latency and the cost of serving each request.

FPGAs are also being used in data centers to accelerate various AI workloads, such as image recognition, natural language processing, and fraud detection. Microsoft, for example, has deployed FPGAs across its data centers (Project Catapult) and used them for real-time AI inference in services such as Bing (Project Brainwave), while Amazon offers FPGA-equipped EC2 instances (such as F1) that customers can program for their own workloads.

However, it's important to remember that FPGAs aren't a one-size-fits-all solution. For applications where ease of development and rapid prototyping matter more than squeezing out maximum efficiency, CPUs and GPUs may still be the better choice. The key is to carefully evaluate the specific requirements of your AI application and choose the hardware platform that best meets those needs.

The Future of FPGAs in AI

5. Emerging Trends and the Road Ahead

The future of FPGAs in AI looks promising. As AI algorithms become more complex and demanding, the need for specialized hardware, including FPGAs, is likely to grow. We are seeing a number of interesting trends in this area, including new FPGA architectures designed specifically for AI, with dedicated blocks for the matrix math that neural networks rely on.

One trend is the integration of FPGAs with other types of hardware, such as CPUs and GPUs. This allows developers to combine the strengths of different hardware platforms to create more powerful and versatile AI systems. For example, an FPGA can be used to accelerate the most computationally intensive parts of an AI algorithm, while a CPU or GPU handles the remaining tasks.
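How much this kind of offloading helps is bounded by Amdahl's law: if a fraction p of the runtime is accelerated by a factor s, the overall speedup is 1 / ((1 - p) + p / s). A minimal sketch follows. `offload_speedup` is a hypothetical helper. The model also ignores the cost of moving data between the host and the accelerator, which in practice reduces the gain further.

```python
def offload_speedup(offloaded_fraction: float, accelerator_speedup: float) -> float:
    """Overall speedup (Amdahl's law) when part of the runtime is accelerated.

    offloaded_fraction: share of the original runtime moved to the accelerator (0..1).
    accelerator_speedup: how many times faster the accelerator runs that share.
    Data-transfer overhead between host and accelerator is ignored.
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if accelerator_speedup <= 0.0:
        raise ValueError("accelerator_speedup must be positive")
    remaining = 1.0 - offloaded_fraction  # share still running on the CPU or GPU
    return 1.0 / (remaining + offloaded_fraction / accelerator_speedup)


# Offloading 90% of the runtime to a part that is 10x faster:
print(round(offload_speedup(0.9, 10.0), 2))  # 5.26
```

Even an infinitely fast accelerator could not push the overall gain past 10x in this example, because the remaining 10% of the work still runs at its original speed. That is why choosing which parts to offload matters as much as the accelerator itself.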

Another trend is the development of higher-level programming tools, such as high-level synthesis (HLS) compilers that turn C or C++ code into hardware designs. These tools aim to abstract away some of the complexities of hardware design and allow developers to focus on the AI algorithms themselves. This could significantly lower the barrier to entry for FPGAs and make them accessible to a wider range of developers.

Ultimately, whether FPGAs become a dominant platform for AI remains to be seen. However, their combination of performance, power efficiency, and adaptability makes them a compelling choice for a growing number of AI applications. The technology is evolving quickly, so keep an eye on this space; it's likely to get even more interesting in the years to come.

Frequently Asked Questions (FAQs)

6. Your Burning Questions Answered

Here are some common questions people ask about FPGAs and their use in AI.

7. What skills do I need to work with FPGAs for AI?

You'll need a strong understanding of digital logic design, hardware description languages (like Verilog or VHDL), and AI algorithms. Familiarity with the design tools from FPGA vendors such as AMD (which acquired Xilinx) and Intel (which acquired Altera) is also crucial.

8. Are FPGAs only for experts, or can beginners learn to use them?

While there's a steep learning curve, beginners can learn FPGAs. Start with basic digital logic concepts and gradually work your way up to more complex designs. Plenty of online resources and tutorials are available.

9. How do FPGAs compare to ASICs (Application-Specific Integrated Circuits) for AI?

ASICs are chips custom-designed for a single task, and they typically offer the best performance and power efficiency for that task. However, they carry high up-front design and manufacturing costs and cannot be changed after fabrication. FPGAs are a compromise: they offer good performance and power efficiency with far more flexibility, but they're not as specialized as ASICs.
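On the cost side of this trade-off, an ASIC's expense is mostly a large one-time (non-recurring engineering, or NRE) cost for design, verification, and manufacturing masks. At high volumes, its per-chip cost can undercut an FPGA's. The sketch below finds the break-even volume in a deliberately simplified cost model. The helper `break_even_volume` and all dollar figures are hypothetical. A real comparison would also count FPGA development costs, time to market, and the value of being able to reconfigure.

```python
import math


def break_even_volume(asic_nre: float, asic_unit_cost: float, fpga_unit_cost: float) -> int:
    """Smallest unit volume at which total ASIC cost is no higher than total FPGA cost.

    Simplified model: ASIC total = NRE + volume * asic_unit_cost,
    FPGA total = volume * fpga_unit_cost (FPGA design costs ignored).
    """
    if fpga_unit_cost <= asic_unit_cost:
        raise ValueError("the ASIC never breaks even unless its unit cost is lower")
    return math.ceil(asic_nre / (fpga_unit_cost - asic_unit_cost))


# Hypothetical numbers: $5M NRE, $10 per ASIC, $110 per FPGA.
volume = break_even_volume(asic_nre=5_000_000, asic_unit_cost=10, fpga_unit_cost=110)
print(volume)  # 50000
```

Below that volume the FPGA is cheaper overall. Above it, the ASIC's lower unit cost wins, provided the design never needs to change.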