Amazing Tips About How Many WebSockets Can A Server Handle

Understanding the WebSockets Landscape

1. What Exactly Are WebSockets?

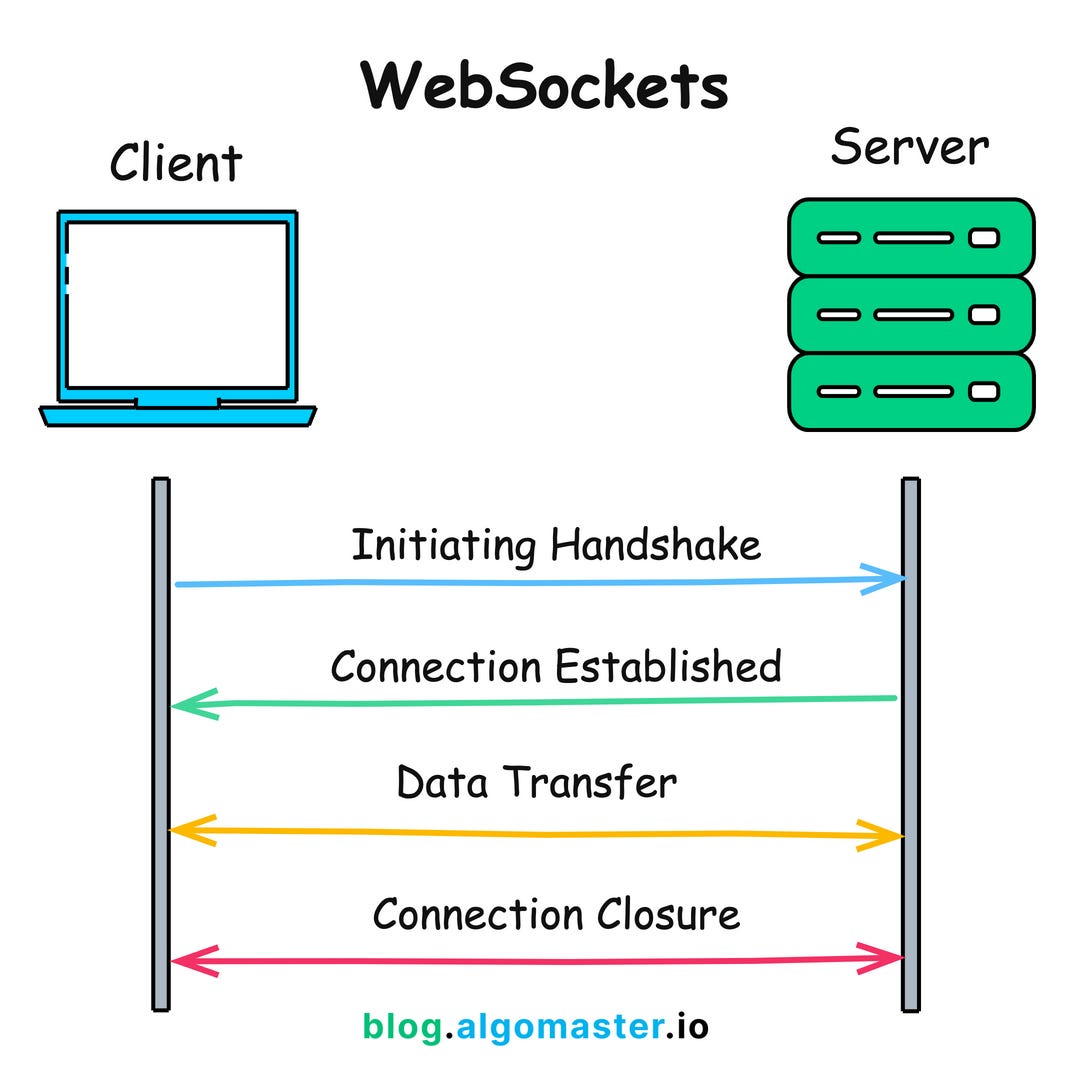

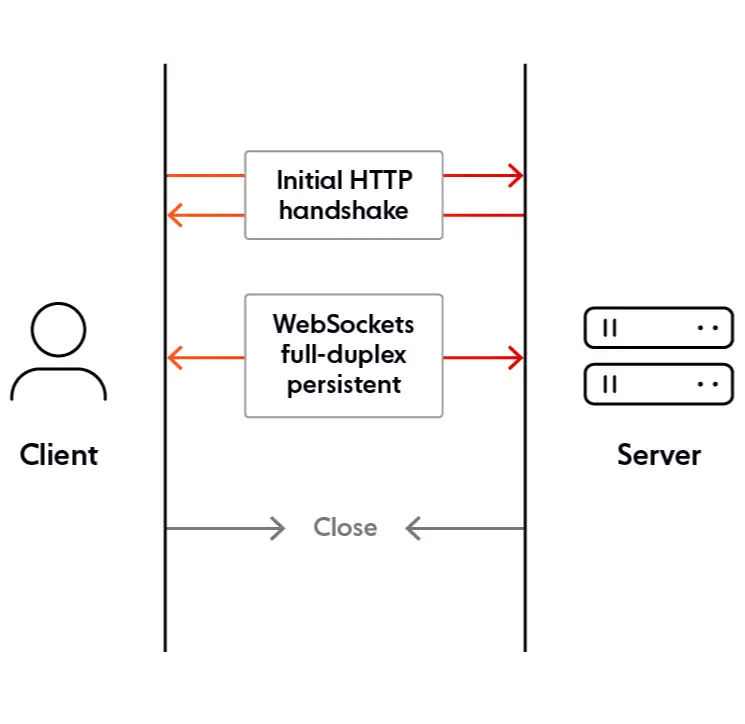

Ever wondered how some websites manage to deliver real-time updates, like live sports scores or chat messages, without you constantly hitting refresh? The secret sauce is often WebSockets. Think of traditional HTTP as a conversation the client always has to start: you ask a question (request), the server gives you an answer (response), and the server cannot speak up on its own. WebSockets, however, establish a persistent, two-way communication channel. It's like having a dedicated phone line open between your browser and the server, allowing either side to send a message whenever it has something to say. No more asking just to find out whether anything changed!

This persistent connection makes WebSockets ideal for applications that require constant data exchange, such as online gaming, collaborative editing tools (think Google Docs), and financial trading platforms. They offer a significant performance boost over traditional HTTP polling techniques, which involve repeatedly asking the server for updates, even when nothing has changed. WebSockets provide a more efficient and responsive user experience, making them a crucial technology for modern web development.
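To put a rough number on the polling overhead described above, here is a small back-of-the-envelope sketch. The function names and the workload figures (1,000 clients, a 2-second poll interval, 30 real updates per hour) are made up for illustration and are not measurements.

```python
def polling_requests(clients: int, poll_interval_s: int, window_s: int) -> int:
    """Requests the server receives when every client polls on a fixed interval."""
    return clients * (window_s // poll_interval_s)


def push_messages(clients: int, updates_in_window: int) -> int:
    """Messages the server sends when it pushes each update once to each client."""
    return clients * updates_in_window


# Hypothetical workload: 1,000 clients over one hour.
polled = polling_requests(clients=1_000, poll_interval_s=2, window_s=3_600)
pushed = push_messages(clients=1_000, updates_in_window=30)
print(f"polling: {polled:,} requests, push: {pushed:,} messages")
```

With these assumed numbers, polling generates far more traffic than pushing, and most of those polls return nothing new.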

So, imagine you're building a super cool chat application. With WebSockets, when someone sends a message, it pops up instantly for everyone in the chatroom. Without WebSockets, you'd have to constantly check for new messages, which is like repeatedly asking, "Anything new? Anything new?" It gets old fast!

WebSockets are especially useful where latency must be kept to a minimum. Consider a multiplayer online game where players interact in real time. Any lag in communication disrupts the gameplay and hurts the user experience. WebSockets let the client and the server exchange updates as soon as they happen, keeping the game running smoothly.

2. Why This "How Many WebSockets?" Question Matters

Okay, so WebSockets are awesome. But here's the thing: servers have limitations. They can only handle so much traffic and so many connections simultaneously. The question of "How many WebSockets can a server handle?" is critical because it directly impacts the scalability and performance of your application. Imagine throwing a massive party and realizing your house can only fit a fraction of the guests. Not ideal, right?

If your server can't handle the number of WebSocket connections required, users will experience lag, disconnections, and generally poor performance. This can lead to a frustrating user experience and, ultimately, impact the success of your application. Knowing the limits of your server allows you to plan for scaling, optimize your code, and choose the right infrastructure to support your application's needs. Think of it as knowing how many pizzas to order for your party so everyone gets a slice (or two!).

Furthermore, understanding WebSocket capacity is crucial for cost management. Running more servers than necessary is a waste of resources and money. By accurately estimating the number of WebSockets each server can handle, you can optimize your infrastructure and reduce operational expenses. This is like knowing exactly how many pizzas you need to order, so you don't end up with a fridge full of leftovers that nobody eats.

In essence, figuring out how many WebSocket connections your server can handle is a critical task. It's not just about technical performance. It's about ensuring a smooth user experience, controlling costs, and scaling your application effectively. So, let's dive deeper into the factors that influence this capacity and how you can optimize your setup to handle more connections.

Factors Affecting WebSocket Capacity

3. Hardware Resources

Just like a gaming PC needs a powerful processor and plenty of RAM to run smoothly, your server's hardware resources play a significant role in determining how many WebSockets it can handle. The CPU is responsible for processing the data transmitted over the connections, while RAM provides the memory needed to manage those connections. A server with a beefy CPU and ample RAM will naturally be able to handle more concurrent WebSockets than one with limited resources.

Think of it like this: the CPU is the chef in a busy restaurant, and RAM is the pantry where the ingredients are stored. If the chef is slow or the pantry is small, they won't be able to prepare many dishes at once. Similarly, a slow CPU or limited RAM will bottleneck your server's ability to handle WebSocket connections. Regularly monitoring CPU utilization and memory usage is critical to prevent overloading and ensure optimal performance.

Disk I/O can also play a role, although generally less significant than CPU and RAM for WebSockets. However, if your application involves logging WebSocket data or storing session information on disk, faster disk I/O can help improve performance. Using solid-state drives (SSDs) instead of traditional hard disk drives (HDDs) can make a noticeable difference in these scenarios.

Beyond CPU and RAM, consider the network bandwidth available to your server. An idle WebSocket connection uses very little bandwidth beyond occasional keep-alive pings, so what matters is how many messages you send, how large they are, and how many connections receive each one. If your server's network link is saturated, messages queue up and latency climbs no matter how much CPU and RAM you have left. Monitoring network traffic and ensuring you have sufficient bandwidth is crucial for maintaining a smooth user experience. It's like having enough lanes on a highway to prevent traffic jams during rush hour.
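A quick way to sanity-check bandwidth is to multiply it out. The sketch below is only an estimate: the function name is hypothetical, and the 60 bytes of per-message overhead (frame header plus TCP/IP and TLS framing) is an assumed round figure, not a measured one.

```python
def outbound_bandwidth_mbps(
    connections: int,
    msgs_per_sec_per_conn: int,
    payload_bytes: int,
    overhead_bytes: int = 60,  # assumed per-message framing overhead
) -> float:
    """Estimate server-to-client bandwidth in megabits per second."""
    bytes_per_sec = connections * msgs_per_sec_per_conn * (payload_bytes + overhead_bytes)
    return bytes_per_sec * 8 / 1_000_000


# Hypothetical workload: 50,000 connections, one 200-byte message per second each.
estimate = outbound_bandwidth_mbps(50_000, 1, 200)
print(f"about {estimate:.0f} Mbps outbound")
```

Compare the result against the link speed your hosting provider actually guarantees, leaving room for traffic spikes.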

4. Operating System Limits

Your operating system (OS) imposes its own set of limits on the number of open files and network connections a process can handle. On Linux and other Unix-like systems, every socket counts as an open file (a file descriptor), so each WebSocket connection uses one. By default, many operating systems set the per-process open-file limit fairly low (1,024 is a common default soft limit on Linux), which can cap the number of WebSockets your server handles concurrently long before CPU or RAM runs out.

On Linux-based systems, the shell's `ulimit` command sets limits on the resources available to processes. Running `ulimit -n` shows or raises the maximum number of open files for processes started from that shell. If your server runs as a service, the limit usually has to be set in the service manager's configuration instead (for example, `LimitNOFILE` in a systemd unit). Similarly, on Windows, you can adjust the registry settings to increase the number of available network ports. Failing to adjust these limits can lead to your server running out of resources and being unable to accept new WebSocket connections.
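A server process can also inspect and raise its own open-file limit at startup. Below is a minimal sketch using Python's standard `resource` module, which is available on Unix-like systems only. The helper name `raise_open_file_limit` is hypothetical, and a process can only raise its soft limit up to the hard limit unless it has extra privileges.

```python
import resource


def raise_open_file_limit(target: int) -> int:
    """Raise the soft open-file limit toward `target`, capped at the hard limit.

    Returns the soft limit in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if hard != resource.RLIM_INFINITY:
        target = min(target, hard)  # cannot exceed the hard limit without privileges
    if soft != resource.RLIM_INFINITY and target > soft:
        # Some systems reject very large values even when the hard limit
        # is reported as unlimited; in that case this call raises an error.
        resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)[0]


soft_limit, hard_limit = resource.getrlimit(resource.RLIMIT_NOFILE)
print(f"open files: soft={soft_limit}, hard={hard_limit}")
```

Calling something like `raise_open_file_limit(65_536)` before the server starts accepting connections is one option. Setting the limit in the service configuration is usually easier to audit.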

In addition to file limits, the OS also imposes limits on the number of ephemeral ports available for outgoing connections. When your server initiates a connection to another server (e.g., for proxying or external services), it uses an ephemeral port. If your server is making a large number of outgoing connections, it can exhaust these ports, leading to connection failures. Tuning the ephemeral port range and timeout settings can help prevent this issue.
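On Linux, the ephemeral port range can be read from `/proc/sys/net/ipv4/ip_local_port_range`. The sketch below parses that file and counts the ports in the range. The helper names are hypothetical, and the file does not exist on other operating systems. Note that the range limits concurrent outgoing connections per destination address and port, not in total.

```python
from pathlib import Path
from typing import Optional, Tuple

# Linux-specific location of the ephemeral port range setting.
PORT_RANGE_FILE = Path("/proc/sys/net/ipv4/ip_local_port_range")


def parse_port_range(text: str) -> Tuple[int, int]:
    """Parse the 'low high' pair found in ip_local_port_range."""
    low, high = (int(part) for part in text.split())
    return low, high


def ephemeral_port_count(low: int, high: int) -> int:
    """Number of ports in the inclusive range [low, high]."""
    return high - low + 1


def read_ephemeral_port_range() -> Optional[Tuple[int, int]]:
    """Return the configured range, or None when the file is not available."""
    if not PORT_RANGE_FILE.exists():
        return None
    return parse_port_range(PORT_RANGE_FILE.read_text())


current = read_ephemeral_port_range()
if current is not None:
    print(f"ephemeral ports available: {ephemeral_port_count(*current)}")
```

If a proxy or gateway opens one upstream connection per client WebSocket to the same backend address and port, this count is the ceiling it hits first.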

Choosing the right operating system for your server can also impact its ability to handle WebSockets. Linux-based systems, particularly those with optimized network stacks, are often preferred for high-performance WebSocket applications due to their scalability and flexibility. However, Windows Server can also be a viable option, especially when paired with optimized WebSocket libraries and proper configuration.

5. WebSocket Implementation and Server Software

The choice of WebSocket implementation and server software can have a significant impact on performance. Different libraries and frameworks offer varying levels of optimization and efficiency. Some implementations are designed for speed and scalability, while others prioritize ease of use and developer productivity. Selecting the right tools for the job is crucial for maximizing the number of WebSockets your server can handle.

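For example, Node.js with a library like `ws` is a popular choice for building WebSocket servers due to its non-blocking, event-driven architecture. This allows Node.js to handle a large number of concurrent connections efficiently. `socket.io` is another common option, but it adds its own protocol on top of WebSockets (with features such as automatic reconnection and fallback transports), so it needs a Socket.IO client rather than a plain WebSocket client, and the extra features add some overhead per connection. Other options include Python with libraries like `websockets` or Java with Spring's WebSocket support.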

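The server software you choose also plays a role. Nginx, Apache, and other web servers can be configured to proxy WebSocket connections to your application server. Optimizing the configuration of these web servers is essential for handling a large number of concurrent WebSockets. This includes tuning parameters like the maximum number of connections, timeouts, and buffer sizes. The proxy must also pass the HTTP `Upgrade` handshake through to the application server, and its idle or read timeout should be longer than your ping interval. Otherwise, quiet connections get closed even though nothing is wrong.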

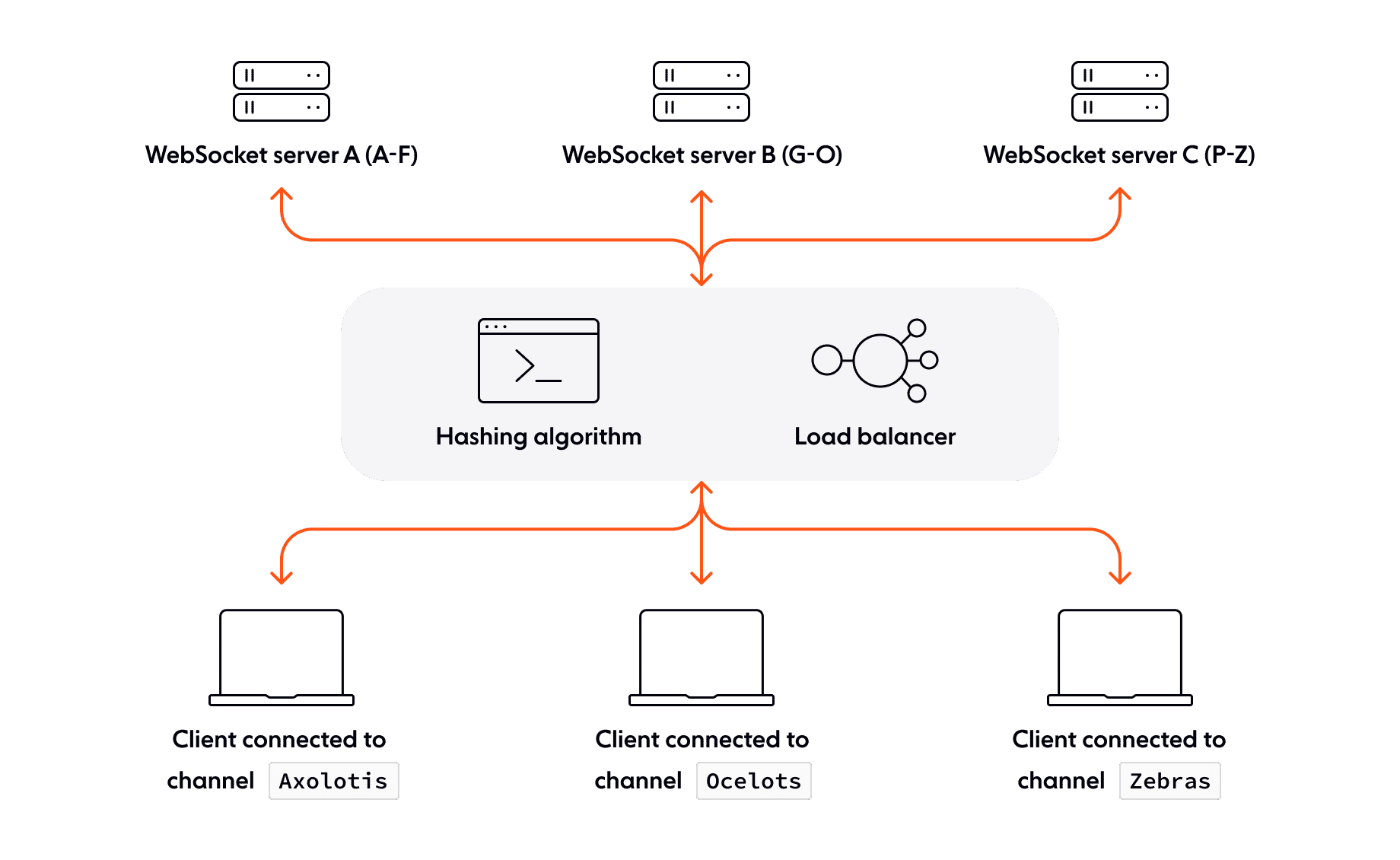

Load balancing is another important consideration. Distributing WebSocket connections across multiple servers can help improve scalability and prevent any single server from becoming overloaded. Load balancers can use various algorithms to distribute traffic, such as round-robin or least-connections, depending on your application's requirements. By spreading the load across multiple servers, you can significantly increase the overall number of WebSockets your application can handle.
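The two algorithms mentioned above can be sketched in a few lines. This is an illustrative toy, not how any particular load balancer is implemented, and the backend names are placeholders. Because WebSocket connections are long-lived, least-connections tends to even out load better than round-robin after servers restart or clients disconnect unevenly.

```python
from itertools import cycle
from typing import Dict, Iterable, Iterator


def round_robin(backends: Iterable[str]) -> Iterator[str]:
    """Yield backends in a fixed rotation, ignoring how busy each one is."""
    return cycle(list(backends))


class LeastConnectionsBalancer:
    """Send each new connection to the backend with the fewest open connections."""

    def __init__(self, backends: Iterable[str]) -> None:
        self.active: Dict[str, int] = {name: 0 for name in backends}

    def acquire(self) -> str:
        # min() returns the first backend with the lowest count, so ties go
        # to whichever backend was listed first.
        backend = min(self.active, key=self.active.__getitem__)
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        # Call this when a WebSocket closes so the count stays accurate.
        self.active[backend] -= 1
```

In practice you would configure this behavior in the load balancer itself. The sketch only shows why the connection count, not the request count, is the number worth tracking.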

Estimating WebSocket Capacity

6. Benchmarking and Load Testing

The most reliable way to determine how many WebSockets your server can handle is through benchmarking and load testing. This involves simulating a realistic workload by creating a large number of concurrent WebSocket connections and measuring the server's performance. Monitoring key metrics such as CPU utilization, memory usage, network latency, and connection success rate can help you identify bottlenecks and determine the point at which the server starts to degrade.

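Tools like Apache JMeter, Gatling, and Locust can be used to create and manage the load testing process (check each tool's WebSocket support first, since some need a plugin or a custom client). These tools allow you to define the number of concurrent users, the rate at which new connections are established, and the duration of the test. By gradually increasing the load, you can observe how the server responds and identify the maximum number of WebSockets it can handle before performance starts to suffer. Keep in mind that the load generator has limits of its own: a single client machine can run out of ephemeral ports or file descriptors long before the server does, so large tests often need several load-generating machines. Analyzing the test results can help you pinpoint areas for optimization and ensure your server can handle the expected workload.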
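Once a ramp test has produced results, finding the point where performance starts to suffer can be automated. The sketch below assumes each test step is recorded as a tuple of connection count, 95th-percentile latency in milliseconds, and connection success rate. The function name, the thresholds, and the sample numbers are all invented for illustration and are not real measurements.

```python
from typing import Iterable, Optional, Tuple

# (concurrent connections, p95 latency in ms, connection success rate)
Sample = Tuple[int, float, float]


def max_load_within_slo(
    samples: Iterable[Sample],
    p95_limit_ms: float,
    min_success_rate: float = 0.99,
) -> Optional[int]:
    """Return the highest load step that met both targets before the first failure."""
    best = None
    for connections, p95_ms, success_rate in sorted(samples):
        if p95_ms > p95_limit_ms or success_rate < min_success_rate:
            break  # performance has degraded; ignore any higher steps
        best = connections
    return best


# Made-up results from a hypothetical ramp test.
ramp_results = [
    (1_000, 12.0, 1.0),
    (5_000, 18.0, 1.0),
    (10_000, 35.0, 0.999),
    (20_000, 140.0, 0.97),
]
print(max_load_within_slo(ramp_results, p95_limit_ms=50.0))
```

Stopping at the first failing step is a deliberate choice here: a later step that happens to pass after an earlier one failed is more likely noise than real capacity.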

When designing your load tests, it's important to simulate real-world usage patterns as closely as possible. This includes sending realistic messages over the WebSocket connections and varying the message size and frequency. Consider factors such as peak usage times and the expected number of concurrent users to create a realistic load profile.

Remember that load testing is not a one-time activity. As your application evolves and the number of users grows, it's essential to repeat the load testing process periodically to ensure your server can still handle the workload. Monitoring your server's performance in production and analyzing real-world usage data can also help you identify potential bottlenecks and proactively address them before they impact your users.

7. Mathematical Modeling (A Rough Estimate)

While benchmarking provides the most accurate results, mathematical modeling can offer a rough estimate of your server's WebSocket capacity. This approach involves estimating the resources required per WebSocket connection and then calculating the maximum number of connections the server can support based on its available resources. This is more of a "back of the envelope" calculation, but can be useful for initial planning.

For example, you can estimate the amount of memory required per WebSocket connection by monitoring memory usage while a small number of connections are active. You can also estimate the CPU overhead per connection by measuring CPU utilization during a similar test. Once you have these estimates, you can calculate the maximum number of connections the server can handle based on its total memory and CPU capacity. Keep in mind that this approach is a simplification and doesn't account for all the factors that can impact performance.
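The calculation described above boils down to computing one limit per resource and taking the smallest. Here is a minimal sketch: the function and parameter names are hypothetical, and every input figure (40 KiB per connection, 40,000 connections per core, 30% CPU headroom) is an assumed placeholder you would replace with your own measurements.

```python
from typing import Dict, Optional, Tuple


def estimate_max_connections(
    usable_ram_bytes: int,
    ram_per_conn_bytes: int,
    cpu_cores: int,
    conns_per_core: int,
    cpu_headroom_percent: int = 30,
    fd_limit: Optional[int] = None,
) -> Tuple[str, int, Dict[str, int]]:
    """Return (bottleneck name, estimated capacity, all per-resource limits)."""
    limits = {
        # How many connections fit in the memory left after the OS and app baseline.
        "memory": usable_ram_bytes // ram_per_conn_bytes,
        # Connections the CPUs can serve while keeping some headroom for spikes.
        "cpu": cpu_cores * conns_per_core * (100 - cpu_headroom_percent) // 100,
    }
    if fd_limit is not None:
        limits["file_descriptors"] = fd_limit
    bottleneck = min(limits, key=limits.__getitem__)
    return bottleneck, limits[bottleneck], limits


# Assumed inputs: 12 GiB usable RAM, 40 KiB per connection, 8 cores.
bottleneck, capacity, limits = estimate_max_connections(
    usable_ram_bytes=12 * 1024**3,
    ram_per_conn_bytes=40 * 1024,
    cpu_cores=8,
    conns_per_core=40_000,
    fd_limit=1_048_576,
)
print(f"estimated capacity: {capacity:,} connections (limited by {bottleneck})")
```

The useful output is often the bottleneck name rather than the number: it tells you which resource to measure more carefully in the load test.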

It's crucial to remember that mathematical modeling is just an estimate and should be validated with actual load testing. Many factors can influence the actual performance of a WebSocket server, including network latency, message size, and the complexity of the application logic. Relying solely on mathematical modeling without conducting load tests can lead to inaccurate estimates and potentially over- or under-provisioning your infrastructure.

Despite its limitations, mathematical modeling can be a useful tool for initial planning and resource allocation. It can help you get a rough idea of the scale of infrastructure required to support your WebSocket application and identify potential bottlenecks early in the development process. However, always prioritize benchmarking and load testing to validate your estimates and ensure your server can handle the expected workload.

Optimization Techniques for Handling More WebSockets

8. Connection Pooling and Multiplexing

Connection pooling and multiplexing are techniques that reduce the number of connections a server has to open and maintain. Connection pooling involves reusing existing connections instead of establishing new ones for each request. This can reduce the overhead associated with connection setup and teardown, leading to improved performance. Multiplexing allows multiple logical connections to share a single physical connection, further reducing overhead and improving resource utilization.

HTTP/2 supports multiplexing at the protocol level, allowing multiple HTTP requests and responses to be transmitted over a single TCP connection. WebSockets can also run inside an HTTP/2 stream (RFC 8441), so several WebSocket sessions from the same client can share one TCP connection. However, not all WebSocket implementations, proxies, and browsers support this, so it's important to check your whole stack if you want to take advantage of this feature.

For a WebSocket server, pooling applies mainly to the connections the server opens itself. Each client's WebSocket is its own long-lived connection and cannot be handed to a different client. What can be pooled are the outgoing connections to databases, caches, and message brokers: instead of opening a new backend connection per WebSocket client or per message, handlers borrow one from the pool and return it when they are done. On the client side, the equivalent is to send all of an application's features over one WebSocket rather than opening a separate connection for each.

By reducing both the number of connections and the overhead of setting them up and tearing them down, connection pooling and multiplexing can significantly increase the number of clients your server can handle. These techniques are particularly effective when each client would otherwise open several connections, or when backend calls are frequent and short-lived.
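At the application level, multiplexing can be as simple as tagging every message with a channel name so that chat, notifications, and presence updates all share one WebSocket. The sketch below shows the idea with a JSON envelope. The class name, the envelope fields `channel` and `data`, and the channel names are all assumptions made for illustration.

```python
import json
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class ChannelMultiplexer:
    """Route messages from one connection to per-channel handlers."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Handler) -> None:
        self._handlers[channel].append(handler)

    @staticmethod
    def encode(channel: str, payload: Any) -> str:
        """Wrap a payload in an envelope naming its logical channel."""
        return json.dumps({"channel": channel, "data": payload})

    def dispatch(self, frame: str) -> int:
        """Deliver one received frame; return how many handlers saw it."""
        envelope = json.loads(frame)
        # .get() avoids creating empty entries for unknown channels.
        handlers = self._handlers.get(envelope["channel"], [])
        for handler in handlers:
            handler(envelope["data"])
        return len(handlers)
```

A client that would otherwise hold three sockets open now holds one, which cuts the server's per-client file descriptor and memory cost accordingly.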

9. Asynchronous I/O and Non-Blocking Operations

Asynchronous I/O and non-blocking operations are essential for building high-performance WebSocket servers. Traditional synchronous I/O operations block the execution of a thread until the operation completes. This can lead to performance bottlenecks when handling a large number of concurrent WebSocket connections. Asynchronous I/O allows the server to perform other tasks while waiting for I/O operations to complete, improving overall responsiveness and throughput.

Non-blocking operations return immediately, even if the operation hasn't completed yet. The server can then use callbacks or promises to handle the results of the operation when it's finished. This allows the server to handle multiple WebSocket connections concurrently without blocking any single thread.

Many modern programming languages and frameworks provide built-in support for asynchronous I/O and non-blocking operations. Node.js, for example, is designed around an event-driven, non-blocking I/O model. This makes it well-suited for building high-performance WebSocket servers. Other languages like Python and Java also offer asynchronous I/O libraries and frameworks.
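The effect is easy to see with Python's built-in `asyncio`. In this sketch, `asyncio.sleep` stands in for waiting on a socket, and the function names are hypothetical. Because no handler blocks the thread while it waits, many simulated connections finish in roughly the time of one wait rather than the sum of all of them.

```python
import asyncio
import time
from typing import List, Tuple


async def handle_connection(conn_id: int, io_delay_s: float) -> int:
    # Stand-in for awaiting a message on a socket: the event loop is free
    # to run other handlers while this one waits.
    await asyncio.sleep(io_delay_s)
    return conn_id


async def serve_all(count: int, io_delay_s: float) -> List[int]:
    handlers = (handle_connection(i, io_delay_s) for i in range(count))
    return await asyncio.gather(*handlers)


def run_demo(count: int = 1_000, io_delay_s: float = 0.05) -> Tuple[int, float]:
    """Run `count` simulated connections on one thread; return (handled, seconds)."""
    start = time.perf_counter()
    results = asyncio.run(serve_all(count, io_delay_s))
    return len(results), time.perf_counter() - start
```

A synchronous loop doing the same waits one after another would take `count * io_delay_s` seconds on a single thread. The catch is that any CPU-heavy or blocking call inside a handler stalls every connection on that event loop.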

By using asynchronous I/O and non-blocking operations, you can significantly improve the scalability and performance of your WebSocket server. This allows you to handle a larger number of concurrent connections without sacrificing responsiveness or throughput.

10. Optimized Data Serialization and Compression

The way you serialize and compress data transmitted over WebSocket connections can significantly impact performance. Using efficient serialization formats and compression algorithms can reduce the amount of data that needs to be transmitted, improving bandwidth utilization and reducing latency.

JSON is a popular serialization format for WebSockets, but it can be verbose and inefficient, especially for large data structures. Binary serialization formats like Protocol Buffers or Apache Avro can offer significant performance improvements over JSON, particularly when transmitting complex data structures. These formats are more compact and can be parsed more quickly, reducing the overhead associated with serialization and deserialization.
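To illustrate the size difference, the sketch below encodes the same price update as JSON and as a fixed binary layout. It uses Python's standard `struct` module as a stand-in for a schema-based format such as Protocol Buffers or Avro, which need third-party packages. The field names and the layout are made up for this example.

```python
import json
import struct
from typing import Tuple

# Network byte order: uint32 instrument id, float64 price, uint32 quantity.
TICK_STRUCT = struct.Struct("!IdI")


def encode_json(instrument_id: int, price: float, quantity: int) -> bytes:
    message = {"instrument_id": instrument_id, "price": price, "quantity": quantity}
    return json.dumps(message).encode("utf-8")


def encode_binary(instrument_id: int, price: float, quantity: int) -> bytes:
    return TICK_STRUCT.pack(instrument_id, price, quantity)


def decode_binary(frame: bytes) -> Tuple[int, float, int]:
    return TICK_STRUCT.unpack(frame)


as_json = encode_json(42, 101.25, 500)
as_binary = encode_binary(42, 101.25, 500)
print(f"JSON: {len(as_json)} bytes, binary: {len(as_binary)} bytes")
```

The binary frame is smaller because field names are not repeated in every message. The trade-off is that both ends must agree on the layout in advance, which is what schema-based formats manage for you.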

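Compression can also help reduce the size of data transmitted over WebSocket connections. The standard WebSocket compression extension, permessage-deflate (RFC 7692), uses the DEFLATE algorithm and is supported by most browsers and server libraries. Algorithms like Brotli can offer better compression ratios, but there is no standardized WebSocket extension for them, so using one means compressing payloads yourself at the application level. Enabling compression on your WebSocket server can significantly reduce bandwidth usage, especially for applications that transmit large amounts of repetitive data. It is not free, though: compression costs CPU time and extra memory per connection, which can lower the number of connections a server can hold, so measure both settings under load before deciding.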
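To get a feel for what DEFLATE does to a typical payload, you can try it with Python's standard `zlib` module. This sketch compresses a single message in isolation using raw DEFLATE (no zlib header). It is an approximation for experimentation, not an implementation of the WebSocket compression extension, and the sample payload is invented.

```python
import json
import zlib


def deflate_raw(data: bytes, level: int = 6) -> bytes:
    """Compress with raw DEFLATE (negative wbits means no zlib header or checksum)."""
    compressor = zlib.compressobj(level, zlib.DEFLATED, -zlib.MAX_WBITS)
    return compressor.compress(data) + compressor.flush()


def inflate_raw(data: bytes) -> bytes:
    """Reverse deflate_raw()."""
    return zlib.decompress(data, -zlib.MAX_WBITS)


# Made-up payload: a roster of 200 users with highly repetitive fields.
roster = [{"user": f"user{i}", "status": "online", "room": "lobby"} for i in range(200)]
payload = json.dumps(roster).encode("utf-8")
compressed = deflate_raw(payload)
print(f"original: {len(payload)} bytes, compressed: {len(compressed)} bytes")
```

Repetitive JSON like this shrinks a lot, while very small or already-compressed messages may not shrink at all, so it is worth testing with your real traffic.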

By optimizing data serialization and compression, you can reduce the overhead associated with data transmission and improve the overall performance of your WebSocket server. This allows you to handle a larger number of concurrent connections and provide a smoother user experience.
